Import Debian or BlueOnyx 5210R CT from OpenVZ 7

Existing clients with an older Aventurin{e} 6109R probably have OpenVZ 7 Containers that they want to move to Aventurin{e} 6110R, which runs Incus.

This document will explain how such a migration is performed.

Please note: This is for migrating a Debian 10/11/12/13 or BlueOnyx 5210R Container from OpenVZ 7 to Incus!

Older EOL versions such as BlueOnyx 5209R, or Debian 9 and older, cannot be migrated this way.

General concept:

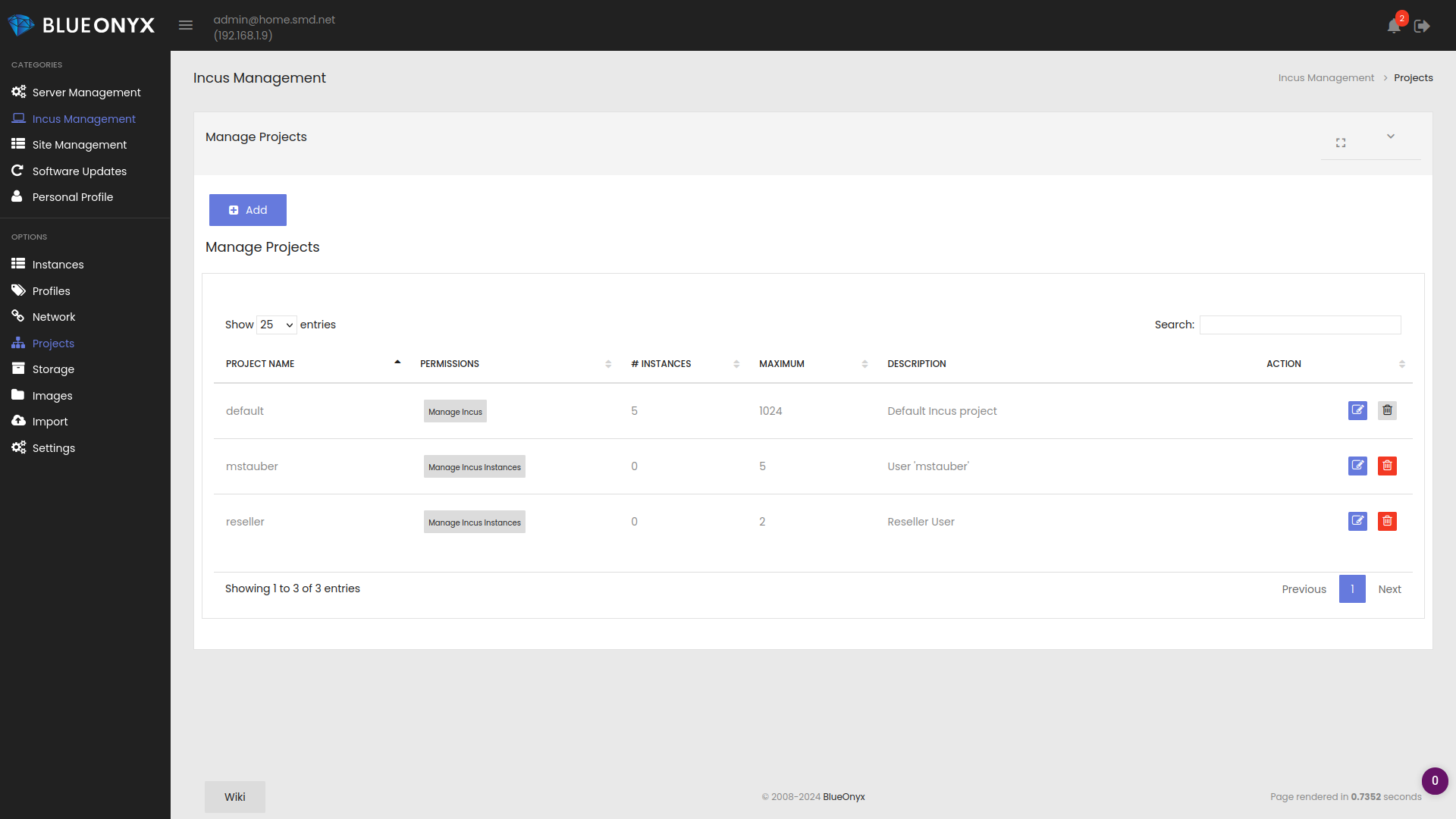

Incus has an import script that allows you to import VMs, CTs or even physical servers into Incus, either as a Container or as a VM instance. An example of how this works with a BlueOnyx 5210R CT is explained here. However, while that works, it involves a lot of hassle, so we streamlined the process further to cover the usual cases.

What we usually want to migrate from Aventurin{e} 6109R (OpenVZ 7 based) to Aventurin{e} 6110R (Incus based) are these Container types:

- Debian 10, 11, 12 or 13

- BlueOnyx 5210R
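The supported source systems can be expressed as a simple check. The sketch below is a hypothetical helper (`is_supported_os` is our name, not part of the import script). It assumes lowercase OS type strings such as `debian` and `blueonyx`, like the values printed in the script's "Sanity Check" line, and Debian versions given as major numbers.

```shell
# Hypothetical helper: return 0 if an OpenVZ 7 CT's OS type/version is one of
# the combinations this migration path supports, 1 otherwise.
# Assumes lowercase type strings ("debian", "blueonyx") and Debian major versions.
is_supported_os() {
    case "$1:$2" in
        debian:10|debian:11|debian:12|debian:13) return 0 ;;
        blueonyx:5210R) return 0 ;;
        *) return 1 ;;
    esac
}

# Example: BlueOnyx 5210R is supported, the EOL 5209R is not.
is_supported_os blueonyx 5210R && echo "blueonyx 5210R: supported"
is_supported_os blueonyx 5209R || echo "blueonyx 5209R: not supported"
```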

To make these typical migrations as easy as possible, we created the script /usr/sausalito/sbin/ovz7ct_to_incus.sh.

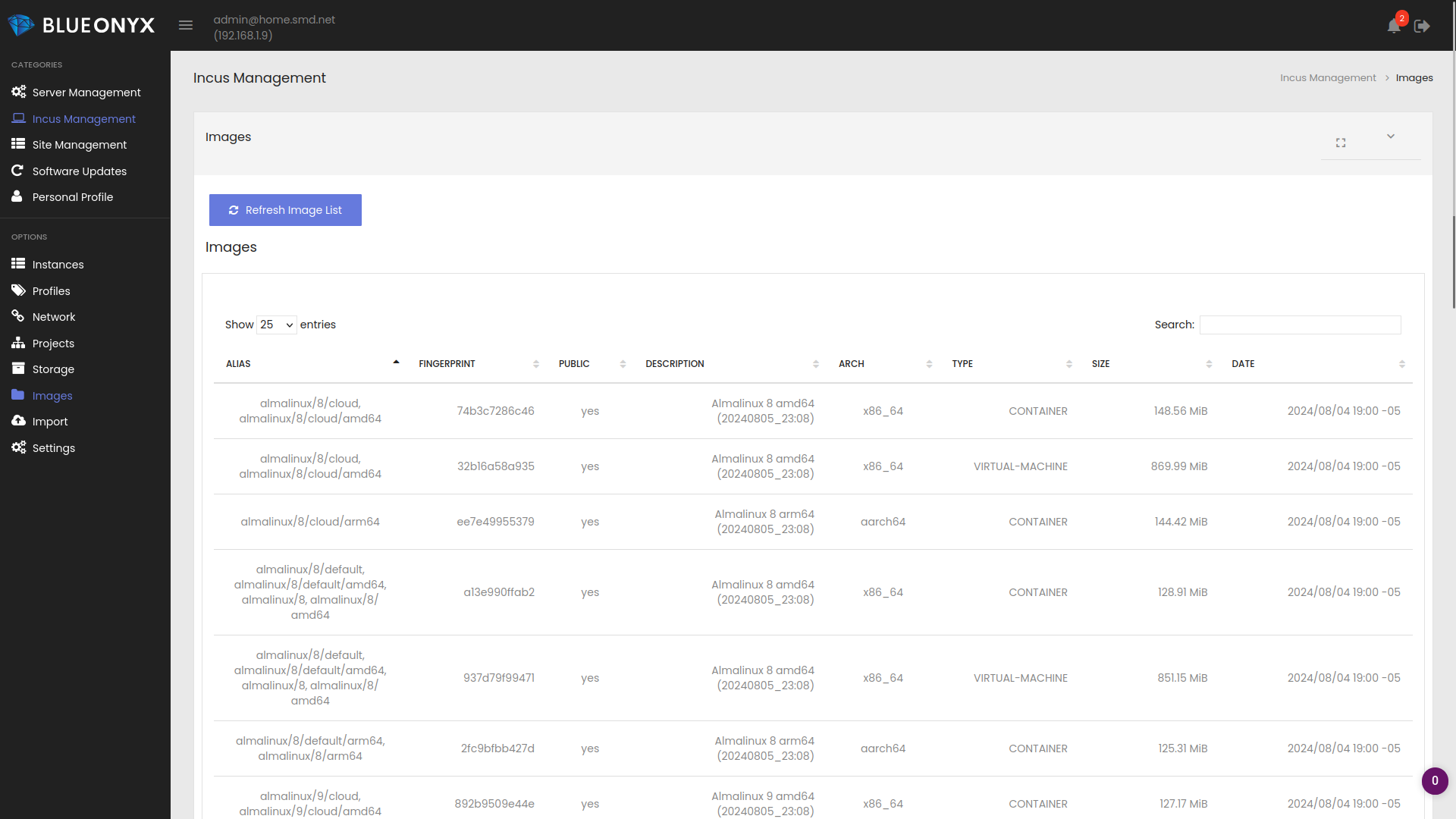

This script is already present on your Aventurin{e} 6110R and works in a "pull" fashion: It connects to the Aventurin{e} 6109R (OpenVZ 7) node and checks whether the specified Container exists and whether it is in RUNNING or at least MOUNTED state. If so, it reads in the Container configuration. If the Container runs Debian 10, 11, 12, 13 or BlueOnyx 5210R, the script creates an Incus container instance on the Aventurin{e} 6110R using the standard OS image from upstream.

In the next step, the configuration from the OpenVZ 7 Container is applied to the Incus instance. Then rsync is run to replace/update the content of the newly created Incus instance with all the files and folders from the OpenVZ 7 Container you are importing. Files on the source Container are NOT modified at all.
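Conceptually, the rsync step is a one-way copy from the mounted CT root on the OpenVZ 7 node into the rootfs of the Incus instance. The sketch below shows what such a pull could look like; it is not the script's actual code. The function name and the `DRY_RUN` switch are hypothetical, the path of the mounted CT root on the node is passed in by you (it is an assumption, so verify it on your node), and the destination is the rootfs path that appears in the example import output of this document.

```shell
# Hypothetical sketch of a one-way "pull" copy from an OpenVZ 7 node into the
# rootfs of an Incus container. rsync only reads from the remote side, so the
# source CT is not modified.
# Assumptions (not taken from ovz7ct_to_incus.sh):
#   $3 is the path where the mounted CT root is visible on the OpenVZ 7 node
#   the Incus rootfs lives under the "default" pool path seen in the import log
pull_ct_rootfs() {
    node=$1 port=$2 src_root=$3 name=$4
    src="root@${node}:${src_root%/}/"
    dst="/home/incus/storage-pools/default/containers/${name}/rootfs/"
    # -a archive mode, -H hard links, -A ACLs, -X extended attributes,
    # --numeric-ids keeps UID/GID numbers instead of mapping them by name.
    set -- rsync -aHAX --numeric-ids -e "ssh -p ${port}" "$src" "$dst"
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$@"       # only print the command that would run
    else
        "$@"
    fi
}
```

Running it with `DRY_RUN=1` first prints the exact rsync command, which is a cheap way to double-check source and destination before any data is copied.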

In the final step, some corrections are applied so that the imported OS and its configuration run properly in an Incus container instance. For Debian, that just means removing /etc/fstab and changing the network configuration from venet0 to eth0. For BlueOnyx, it is a lot more complicated:

- venet0:0 and venet0 get changed in CODB to "eth0" and "lo" respectively

- IPType in CODB gets changed from "VZv4" to "IPv4"

- Network-Scripts get deleted and newly created with corrected information

- /tmp/bx_cloud_cfg gets created. If present, the network gets configured by a constructor with the data in that file.

- SSH host key permissions get fixed. They may have been too permissive on OpenVZ 7 CTs.

- /aquota.group and /aquota.user get deleted.

- NetworkManager gets enabled.
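To illustrate the CODB part of these corrections, here is a minimal sketch that operates on the rootfs of an imported, stopped instance. It is not the script's code: the function name is hypothetical, and it assumes (based only on the file paths in the example output of this document) that each CODB property is stored as a plain file such as `.device` or `.IPType` holding just the value. The network scripts, SSH keys, quota files and NetworkManager steps are not covered.

```shell
# Hypothetical sketch: rewrite OpenVZ-specific CODB values inside a container
# rootfs. Assumes each CODB property is a plain file holding only its value.
# Values are written back without a trailing newline (also an assumption).
fix_codb_network() {
    objects="$1/usr/sausalito/codb/objects"
    for f in "$objects"/*/.device; do
        [ -f "$f" ] || continue                 # glob matched nothing
        case "$(cat "$f")" in
            "venet0:0") printf '%s' eth0 > "$f"; echo "Updated: $f (venet0:0 -> eth0)" ;;
            "venet0")   printf '%s' lo   > "$f"; echo "Updated: $f (venet0 -> lo)" ;;
        esac
    done
    for f in "$objects"/*/.IPType; do
        [ -f "$f" ] || continue
        if [ "$(cat "$f")" = "VZv4" ]; then
            printf '%s' IPv4 > "$f"
            echo "Updated: $f (VZv4 -> IPv4)"
        fi
    done
}

# Usage: fix_codb_network /home/incus/storage-pools/default/containers/jartest/rootfs
```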

At the end, you have a ready-to-run Incus instance with the correct network configuration and all your data as it was on the OpenVZ 7 node. All that is left to do is to stop the Container on the OpenVZ 7 node and start it on the Incus node.

Initial preparation:

As the script /usr/sausalito/sbin/ovz7ct_to_incus.sh uses SSH to connect to the OpenVZ 7 node, you must exchange SSH keys. That means: From your Incus node, you must be able to SSH into the OpenVZ 7 node as "root" without a password.

If you haven't already done so, you can easily set this up by running the following on your Incus node:

~]# ssh-copy-id root@<openvz-server-name-or-ip>

Afterwards confirm that you can login as "root" to the source server without being prompted for a password:

~]# ssh root@<openvz-server-name-or-ip>

If that works, press CTRL+D or type exit to return to the target server of the migration.
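To verify the key exchange non-interactively (for example before migrating several Containers in a row), you can use the SSH option BatchMode, which makes ssh fail instead of prompting for a password. BatchMode also suppresses the host key confirmation, so log in interactively once first, as described above. The function name is hypothetical.

```shell
# Return 0 if root can log in to the given host without any prompt.
# BatchMode=yes disables password and host-key prompts, so a missing or
# rejected key makes ssh fail instead of waiting for input.
check_passwordless_ssh() {
    host=$1
    port=${2:-22}
    ssh -o BatchMode=yes -o ConnectTimeout=5 -p "$port" "root@$host" true
}

# Usage on the Incus node:
#   check_passwordless_ssh zebra.smd.net 22 && echo "SSH key login works"
```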

Actual Migration:

On the OpenVZ 7 Node:

Make sure the Container you want to import to Incus is in RUNNING or at least in MOUNTED state.

Verify the container list:

[root@zebra /]# list

NAME  T   STATUS   HOSTNAME          IP_ADDR
913   CT  stopped  debtest.smd.net   208.67.251.188
914   CT  mounted  jartest.smd.net   208.67.251.190

In our case, we want to move the CT named 914 to Incus. It is in MOUNTED state, which is the minimum requirement for this to work. It could also be RUNNING during the transfer, but for the best data consistency you should put the source CT into MOUNTED state. You can do so this way:

prlctl stop <VPSID>

prlctl mount <VPSID>

Note that <VPSID> is the "NAME" in the output above. So "914" in our case.
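If you prefer to check the state from the Incus node before starting the import, a small helper can pick the STATUS column out of a container list like the one shown above. This assumes exactly that column layout (NAME in column 1, STATUS in column 3). The function names are hypothetical, and whether the `list` command used above is available in a non-interactive SSH session on your node is also an assumption.

```shell
# Hypothetical helper: read a container list (NAME T STATUS HOSTNAME IP_ADDR)
# on stdin and print the STATUS of the CT whose NAME equals $1.
ct_status() {
    awk -v id="$1" '$1 == id { print $3 }'
}

# Return 0 if a status allows the import (running or mounted).
ct_importable() {
    case "$1" in
        running|mounted) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage:
#   status=$(ssh root@zebra.smd.net list | ct_status 914)
#   ct_importable "$status" || echo "CT 914 is '$status'; mount it first"
```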

On the Incus side:

The script /usr/sausalito/sbin/ovz7ct_to_incus.sh requires several command-line parameters for the migration. You can list them by running the script without parameters:

[root@incus ~]# /usr/sausalito/sbin/ovz7ct_to_incus.sh

This script can migrate OpenVZ 7 CTs (Debian 10/11/12/13 or BlueOnyx 5210R) to Incus.

Error: Missing required parameters!

Usage: /usr/sausalito/sbin/ovz7ct_to_incus.sh --vpsid <VPS_ID> --node <NODE> --port <SSH_PORT> --name <CONTAINER_NAME> --gateway <GATEWAY>

So here is what we need:

--vpsid: The OpenVZ Container NAME. In our case: 914

--node: The IP or hostname of the OpenVZ 7 node

--port: The SSH port number on the OpenVZ 7 node

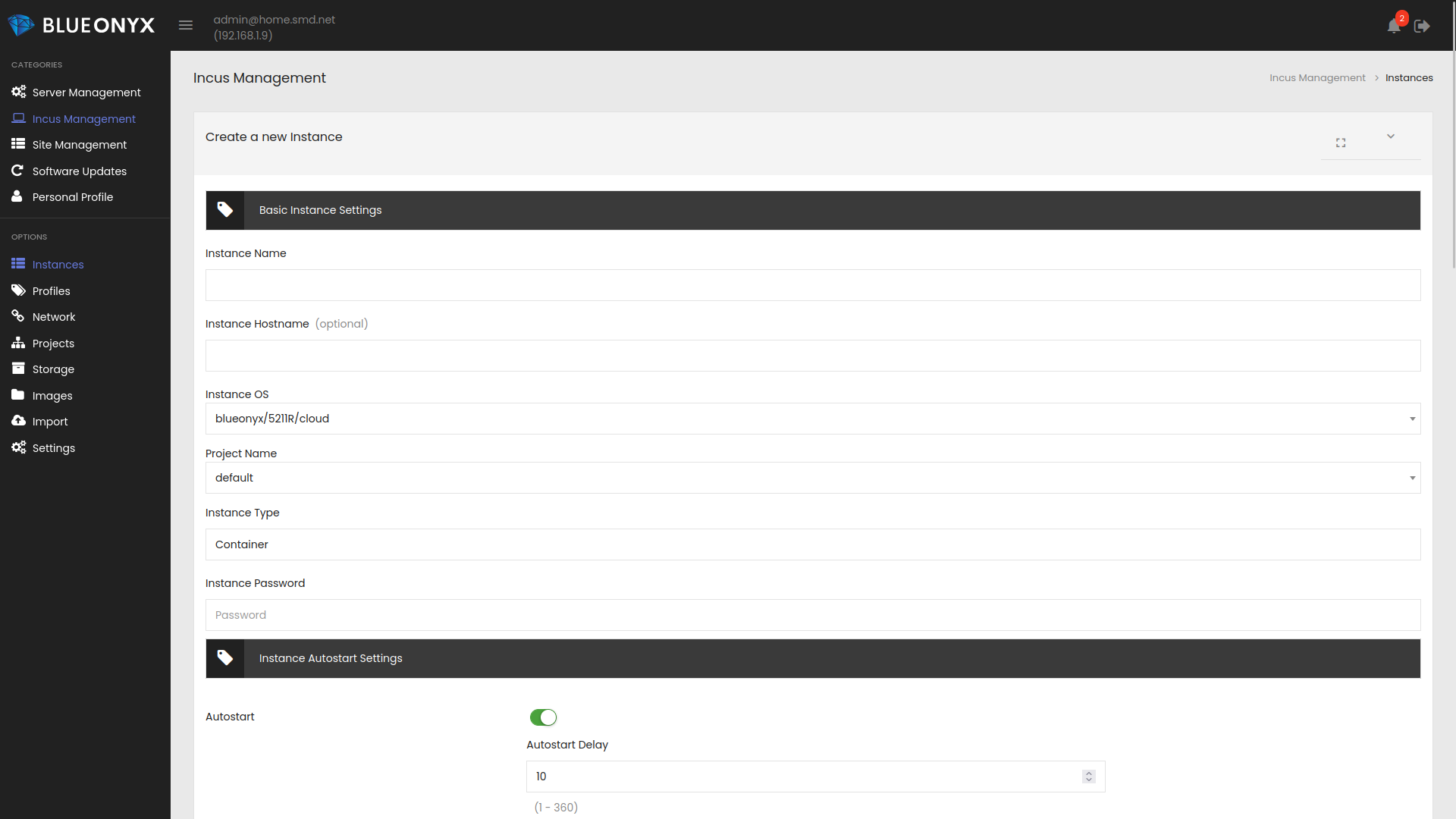

--name: The name that the new Incus CT should have after the import. This is analogous to the VPSID or NAME on OpenVZ 7. Must be alphanumeric.

--gateway: The IPv4 gateway address that the Incus CT must use to reach the outside world. This is NOT the IP of the Incus node itself, but the gateway of the network that the CT's IP address belongs to. OpenVZ 7 CTs didn't need one, but Incus CTs do.
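Before launching the import, it can help to sanity-check the values you collected. The sketch below applies the constraints listed above (alphanumeric `--name`) plus two assumptions of ours (an SSH port from 1 to 65535 and a dotted-quad IPv4 gateway). The function name is hypothetical, and the import script itself may validate differently.

```shell
# Hypothetical pre-flight check for the ovz7ct_to_incus.sh parameters.
# Usage: validate_import_args <vpsid> <port> <name> <gateway>
# Prints a message and returns 1 on the first invalid value.
validate_import_args() {
    vpsid=$1 port=$2 name=$3 gateway=$4

    if [ -z "$vpsid" ]; then
        echo "--vpsid must not be empty"; return 1
    fi

    # Port: 1 to 5 digits, then range-checked.
    case "$port" in
        ''|*[!0-9]*|??????*) echo "--port must be a number from 1 to 65535"; return 1 ;;
    esac
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
        echo "--port must be a number from 1 to 65535"; return 1
    fi

    # Name: alphanumeric only, as required for the new Incus CT.
    case "$name" in
        ''|*[!A-Za-z0-9]*) echo "--name must be alphanumeric"; return 1 ;;
    esac

    # Gateway: digits and single dots only, exactly four octets of 0-255.
    case "$gateway" in
        ''|*[!0-9.]*|.*|*.|*..*) echo "--gateway must be an IPv4 address"; return 1 ;;
    esac
    old_ifs=$IFS
    IFS=.
    set -- $gateway
    IFS=$old_ifs
    if [ $# -ne 4 ]; then
        echo "--gateway must be an IPv4 address"; return 1
    fi
    for octet in "$@"; do
        case "$octet" in
            ????*) echo "--gateway octet out of range"; return 1 ;;
        esac
        if [ "$octet" -gt 255 ]; then
            echo "--gateway octet out of range"; return 1
        fi
    done
    return 0
}

# Usage: validate_import_args 914 22 jartest 208.67.251.177 && echo "parameters look OK"
```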

Once you have gathered all the required information, you can run the import script like this:

/usr/sausalito/sbin/ovz7ct_to_incus.sh --vpsid 914 --node zebra.smd.net --port 22 --name jartest --gateway 208.67.251.177

Whether the script fails for some reason or goes through, you can re-run it at any time without having to delete the created Incus instance.
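Because a re-run is safe, a transient failure (for example a dropped SSH connection during rsync) can simply be retried. A minimal retry wrapper might look like this. The function name, the attempt count and the pause (`RETRY_DELAY`, default 10 seconds) are arbitrary choices of ours, and the wrapper assumes the import script returns a non-zero exit status when it fails.

```shell
# Hypothetical helper: run a command up to $1 times until it succeeds.
# Only sensible for commands that are safe to repeat, like the import script.
retry() {
    max_attempts=$1
    shift
    attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "Giving up after $attempt attempt(s): $*" >&2
            return 1
        fi
        echo "Attempt $attempt failed, retrying in ${RETRY_DELAY:-10}s..." >&2
        attempt=$((attempt + 1))
        sleep "${RETRY_DELAY:-10}"
    done
    return 0
}

# Usage:
#   retry 3 /usr/sausalito/sbin/ovz7ct_to_incus.sh --vpsid 914 --node zebra.smd.net \
#       --port 22 --name jartest --gateway 208.67.251.177
```

Retrying does not help with persistent problems such as a wrong parameter, so check the output of the last attempt if all attempts fail.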

Example output for the import of a BlueOnyx 5210R CT to Incus:

Starting backup run for jartest...

Creating container jartest for OpenVZ CT 914...

DEBUG: Extracted CONFIG='8388608 104857600 208.67.251.190 255.255.255.0 jartest.smd.net 8.8.8.8,208.77.220.11,208.77.216.60 blueonyx 5210R 2d0ba61b-17bc-4cd2-a94e-589f30b01b38 /vz/private/2d0ba61b-17bc-4cd2-a94e-589f30b01b38/ve.conf'

Sanity Check - OS_TYPE: 'blueonyx', OS_VERSION: '5210R'

Using image blueonyx/5210R/cloud for container creation.

Container jartest already exists. Skipping creation.

Starting rsync for jartest...

Configuring network settings for jartest...

Updated /etc/networks for jartest.

Skipping /etc/network/interfaces for jartest (Non-Debian).

Fixing BlueOnyx CODB IPType settings for jartest...

Updated: /home/incus/storage-pools/default/containers/jartest/rootfs/usr/sausalito/codb/objects/1/.IPType

Completed fixing BlueOnyx CODB IPType settings for jartest.

Updated: /home/incus/storage-pools/default/containers/jartest/rootfs/usr/sausalito/codb/objects/3/.device (venet0:0 → eth0)

Updated: /home/incus/storage-pools/default/containers/jartest/rootfs/usr/sausalito/codb/objects/66/.device (venet0 → lo)

Removing OpenVZ network scripts in jartest...

Removed OpenVZ network scripts from jartest.

Creating /tmp/bx_cloud_cfg for BlueOnyx instance jartest...

Generated /tmp/bx_cloud_cfg for jartest.

Creating network configuration files for BlueOnyx instance jartest...

Generated /home/incus/storage-pools/default/containers/jartest/rootfs/etc/sysconfig/network-scripts/ifcfg-eth0.

Generated /home/incus/storage-pools/default/containers/jartest/rootfs/etc/sysconfig/network.

Setting SSH host key permissions for jartest...

Fixed permissions for /home/incus/storage-pools/default/containers/jartest/rootfs/etc/ssh/ssh_host_ecdsa_key

Fixed permissions for /home/incus/storage-pools/default/containers/jartest/rootfs/etc/ssh/ssh_host_rsa_key

Fixed permissions for /home/incus/storage-pools/default/containers/jartest/rootfs/etc/ssh/ssh_host_ed25519_key

Completed SSH key permission fixes for jartest.

Removing quota files from jartest...

Removed /home/incus/storage-pools/default/containers/jartest/rootfs/aquota.group

Removed /home/incus/storage-pools/default/containers/jartest/rootfs/aquota.user

Ensuring NetworkManager is enabled in BlueOnyx instance jartest...

Enabled NetworkManager for auto-start in jartest.

Updated NM_CONTROLLED=yes in /home/incus/storage-pools/default/containers/jartest/rootfs/etc/sysconfig/network-scripts/ifcfg-eth0

Import completed successfully for jartest.
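If you capture this output in a log file (for example with tee), the last line serves as a simple success marker. The sketch below searches for the "Import completed successfully for <name>." line shown above. The function name is hypothetical, and relying on that exact wording is an assumption, since the message text could change in a future version of the script.

```shell
# Hypothetical helper: succeed if the import log contains the success line
# for the given instance name (fixed-string match, no regex).
import_succeeded() {
    name=$1
    logfile=$2
    grep -Fq "Import completed successfully for ${name}." "$logfile"
}

# Usage:
#   /usr/sausalito/sbin/ovz7ct_to_incus.sh --vpsid 914 --node zebra.smd.net \
#       --port 22 --name jartest --gateway 208.67.251.177 2>&1 | tee /root/import-jartest.log
#   import_succeeded jartest /root/import-jartest.log && echo "Import OK"
```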

Post-Import:

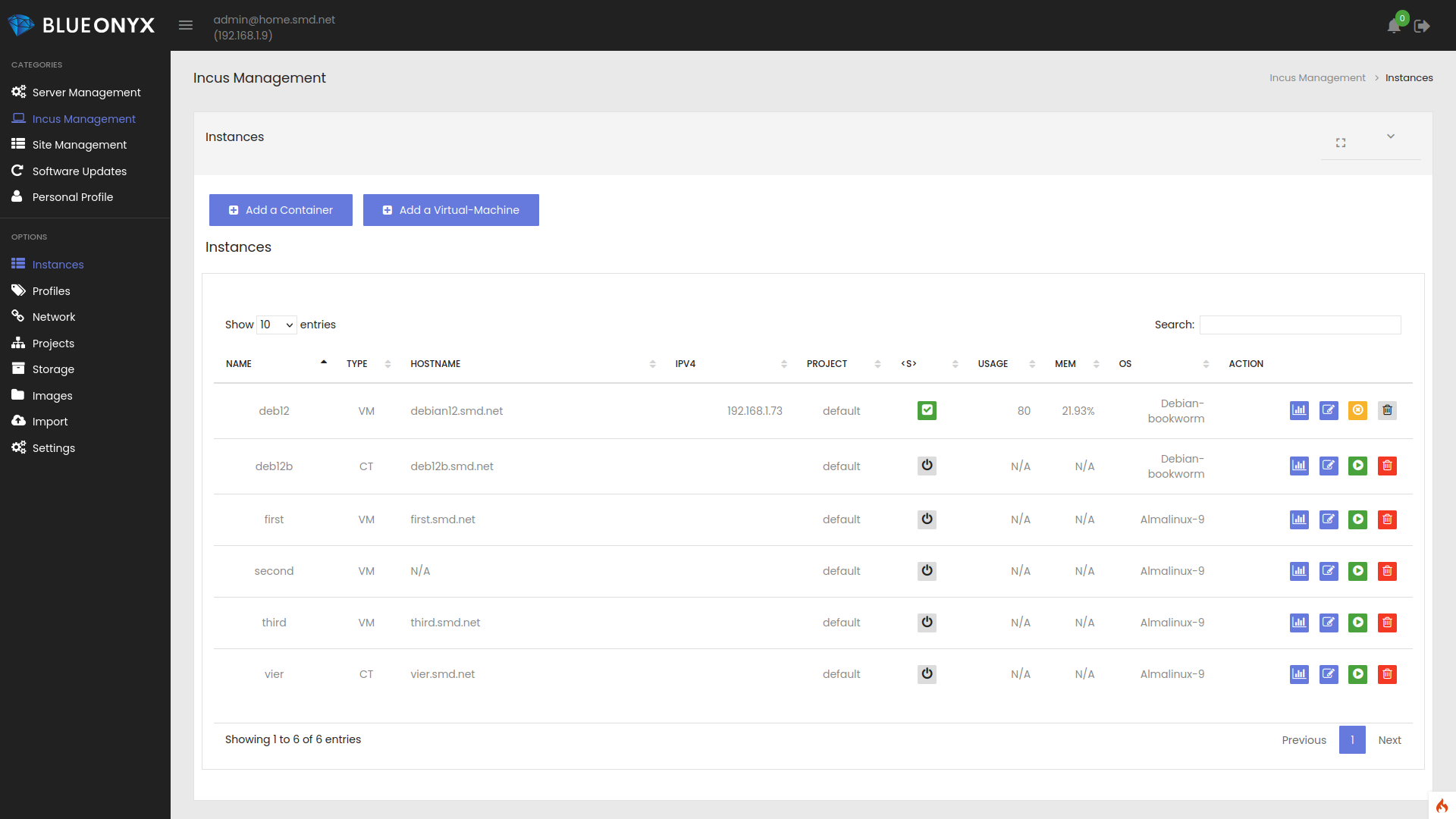

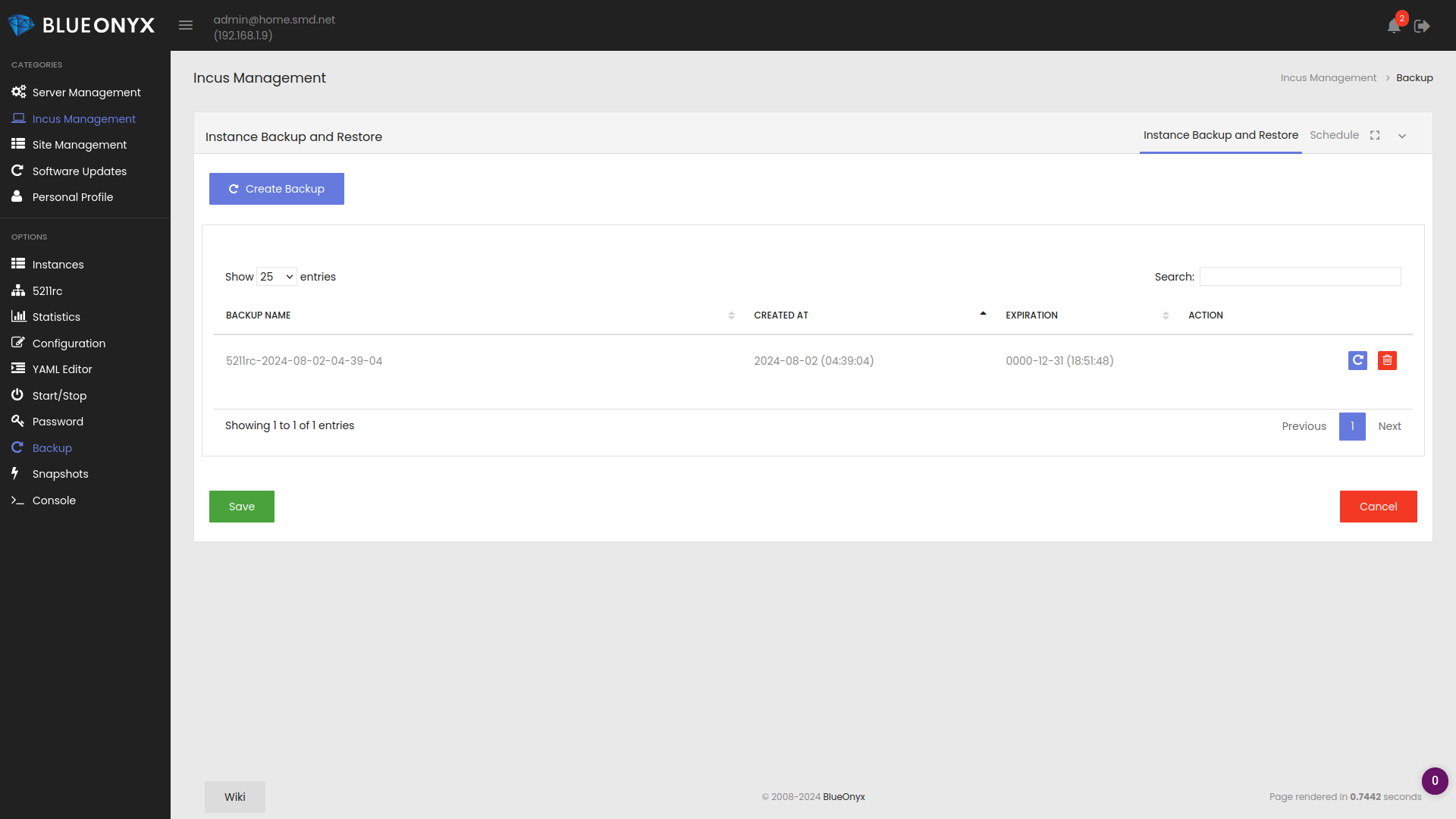

The imported Container should now show up in the Incus instance list, both in the GUI and on the shell:

[root@incus ~]# incus list

+----------+---------+------------+-----------+-----------+
|   NAME   |  STATE  |    IPV4    |   TYPE    | SNAPSHOTS |
+----------+---------+------------+-----------+-----------+
| jartest  | STOPPED |            | CONTAINER | 0         |
+----------+---------+------------+-----------+-----------+

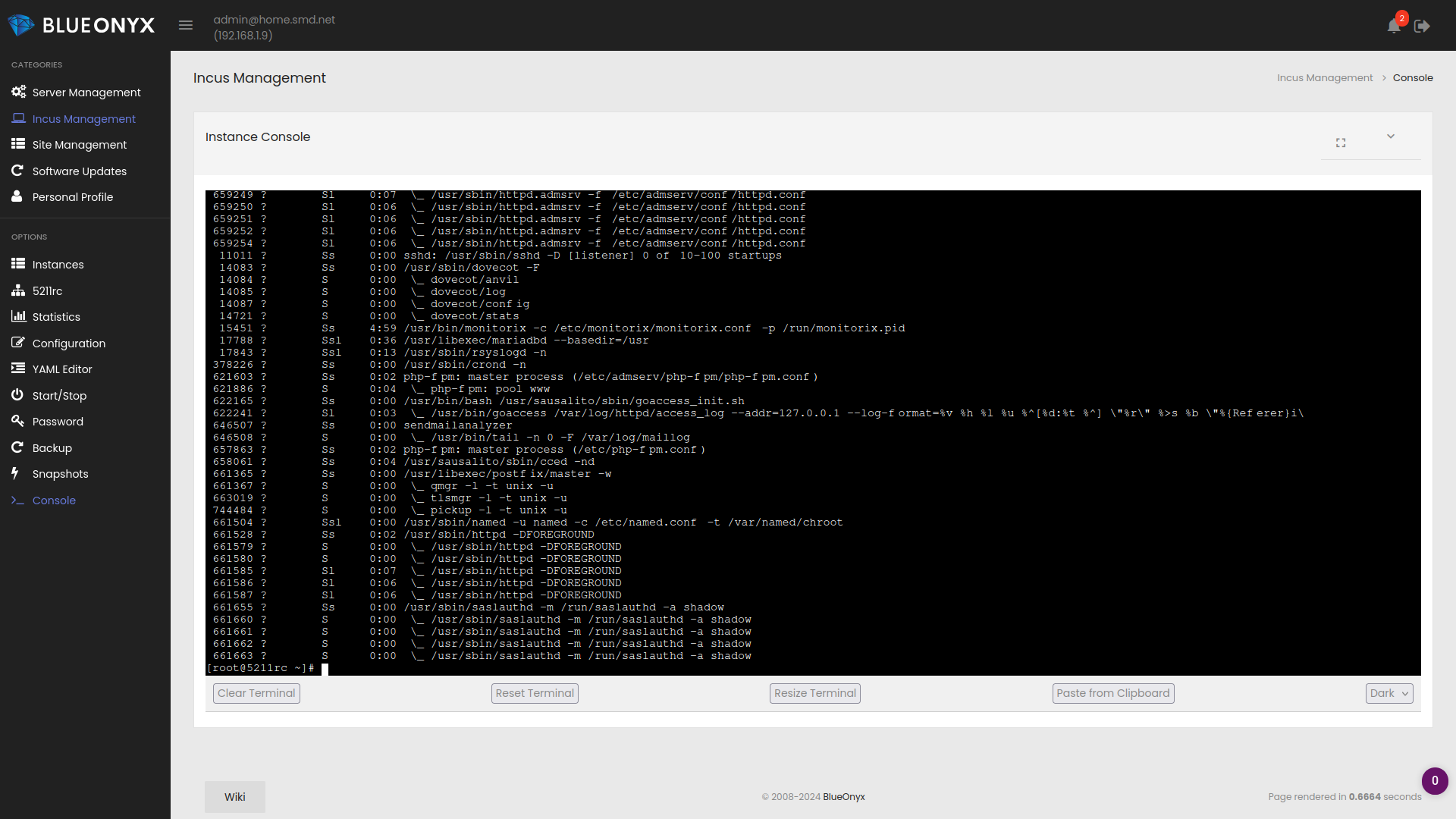

You can start it from the shell or from the GUI and then check if everything went OK.

Example:

[root@incus ~]# incus start jartest
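On the shell, starting the instance and waiting until Incus reports it as RUNNING can be scripted with `incus list` in CSV mode, using only the name (`n`) and state (`s`) columns. The function names and the default 30-second timeout are our own choices; the exact-name match in awk avoids relying on how `incus list` filters by name.

```shell
# Print the state (RUNNING, STOPPED, ...) of one Incus instance, matching the
# name exactly against the CSV "name,state" output.
instance_state() {
    incus list -c ns -f csv | awk -F, -v n="$1" '$1 == n { print $2 }'
}

# Start an instance (unless it already runs) and wait up to $2 seconds
# (default 30) until Incus reports it as RUNNING.
start_and_wait() {
    name=$1
    timeout=${2:-30}
    if [ "$(instance_state "$name")" != "RUNNING" ]; then
        incus start "$name" || return 1
    fi
    waited=0
    while [ "$(instance_state "$name")" != "RUNNING" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "$name did not reach RUNNING within ${timeout}s" >&2
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "$name is RUNNING"
}

# Usage: start_and_wait jartest
```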

You can then also enter the instance to look around and verify that everything worked:

[root@incus ~]# incus shell jartest

Last login: Thu Feb 16 20:43:39 -05 2023 from 192.168.131.84 on pts/1

[root@jartest ~]#
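Besides an interactive shell, a few non-interactive checks can be run from the Incus node with `incus exec`. The checks below (eth0 has an IPv4 address, the gateway answers a ping) are our suggestions, not part of the import script. They assume that `ip` and `ping` exist inside the container, and the function name is hypothetical.

```shell
# Hypothetical post-import network checks for an Incus container.
# $1 = instance name, $2 = the gateway passed to --gateway during the import.
verify_instance_network() {
    name=$1
    gateway=$2
    if ! incus exec "$name" -- ip -4 addr show dev eth0 | grep -q 'inet '; then
        echo "$name: eth0 has no IPv4 address" >&2
        return 1
    fi
    if ! incus exec "$name" -- ping -c 1 -W 2 "$gateway" >/dev/null; then
        echo "$name: gateway $gateway is not reachable" >&2
        return 1
    fi
    echo "$name: network looks OK"
}

# Usage: verify_instance_network jartest 208.67.251.177
```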

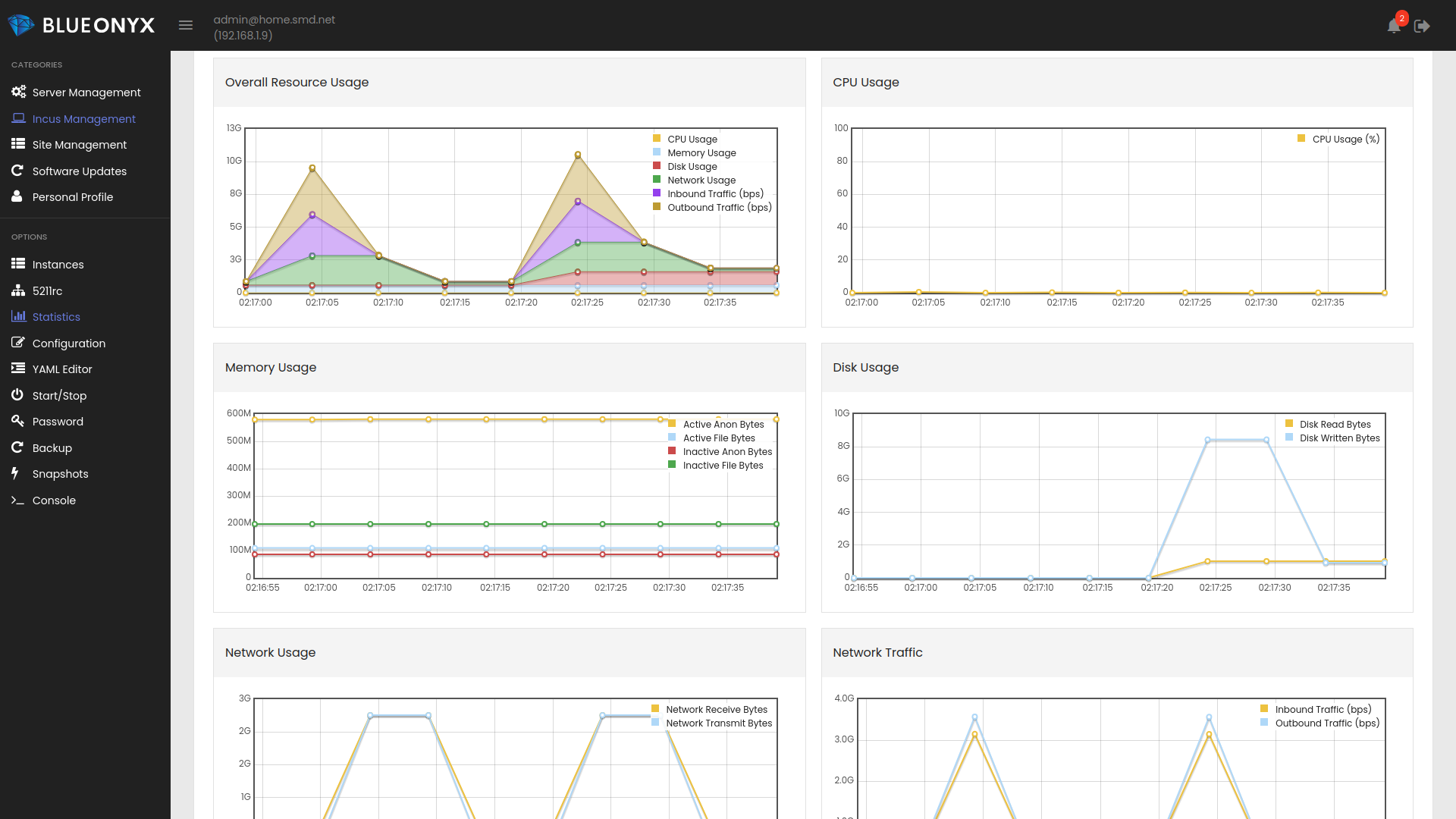

Depending on the circumstances, you may have to adjust your Container's network settings, and you may also want to enable "auto-start" or change other configuration settings. But basically, the Container should be ready to run.
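On the shell, auto-start corresponds to the Incus instance option `boot.autostart`. A small sketch that enables it and reads the value back could look like this (the function name is hypothetical; `jartest` is the example instance):

```shell
# Enable auto-start for an Incus instance and confirm that the setting stuck.
enable_autostart() {
    name=$1
    incus config set "$name" boot.autostart=true || return 1
    [ "$(incus config get "$name" boot.autostart)" = "true" ]
}

# Usage: enable_autostart jartest
```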

All done!

Your old OpenVZ 7 Container has now been successfully converted into an Incus Instance of type Container. Enjoy!